The Future of Truth: Navigating a World of Generative AI

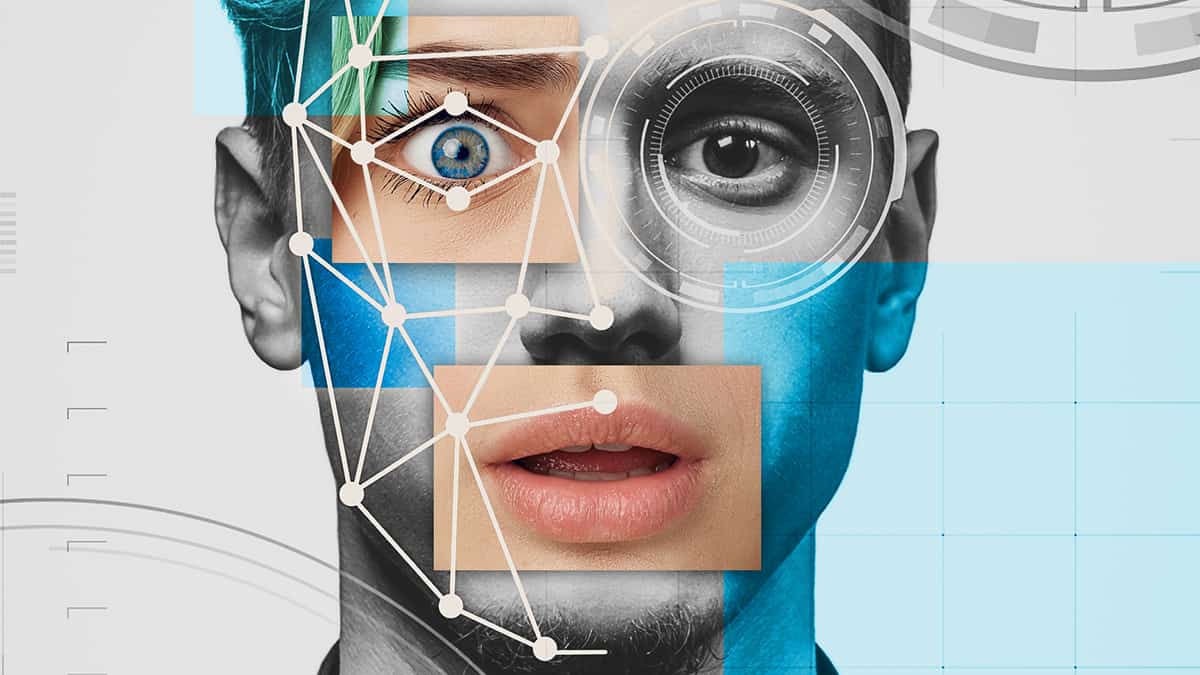

As technology advances at an unprecedented pace, the line between what is true and what is not is becoming harder to draw. With the rise of generative artificial intelligence (AI), it is increasingly difficult to tell what is real from what is fabricated. In this new world, truth can’t be established with the certainty it once could.

Generative AI can produce much of what a person can, from realistic pictures and videos to complex pieces of writing. The technology is powered by machine learning models trained on huge amounts of data, which allows them to produce results that are often hard to tell apart from those made by humans.

This has far-reaching effects on how we think about truth. We have long relied on evidence such as photographs, recordings, and documents to establish what is true. But in a world where generative AI can fabricate convincing versions of all of these, it is no longer clear what counts as evidence. For example, an AI-generated fake video of a political figure could be used to spread fake news, making it hard to tell what is real and what isn’t.

The fast growth of generative AI also means that the spread of false information is likely to speed up. As AI gets better, it will be harder and harder for the average person to tell whether the information they are given is real.

In the years to come, people will have to figure out how to live in this new world where truth is hard to define. Traditional ways of checking information are becoming less reliable, so it’s more important than ever to be skeptical of what we’re told and look for other ways to find the truth.

At the same time, governments, institutions, and tech companies need to take responsibility for how generative AI is changing the way we think about truth. This could mean that technology is regulated more strictly to stop the spread of false information and that new tools are made to help people check the validity of the information they are given.

Numbers tell the real story

Here are some numbers that highlight the rapid growth of AI and the spread of misinformation, which will only continue to increase as the technology becomes more advanced and widely adopted.

- AI market size: According to a report by MarketsandMarkets, the global AI market is expected to reach $339.8 billion by 2026, growing at a compound annual growth rate of 27.6% from 2021 to 2026.

- AI adoption: A survey by Gartner found that 75% of organizations have either implemented or plan to implement AI within the next three years.

- AI and fake news: A 2018 MIT study of Twitter, published in Science, found that false news was 70% more likely to be retweeted than true news, and that true stories took about six times as long as false ones to reach 1,500 people.

- AI and deepfakes: The market for deepfake technology is expected to grow from $78 million in 2020 to $2.6 billion by 2025, according to a report by Allied Market Research.

- Misinformation during COVID-19: The World Health Organization reported that there has been a surge in misinformation about COVID-19, with false information spreading rapidly on social media platforms.
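To get a feel for what the growth figures in the list above imply, it helps to run the compound-growth arithmetic. The sketch below is only a back-of-the-envelope check: it takes the quoted numbers at face value, and the function names `cagr` and `implied_start` are made up for this illustration.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.25 means 25% per year)."""
    return (end_value / start_value) ** (1 / years) - 1


def implied_start(end_value: float, rate: float, years: int) -> float:
    """Starting value implied by an end value and a constant annual growth rate."""
    return end_value / (1 + rate) ** years


# Figures as quoted in the list above (USD). Only the arithmetic is new here.
ai_market_2021 = implied_start(339.8e9, 0.276, 5)  # 2021 -> 2026 at 27.6% per year
deepfake_cagr = cagr(78e6, 2.6e9, 5)               # 2020 -> 2025

print(f"Implied 2021 AI market: ${ai_market_2021 / 1e9:.1f} billion")
print(f"Implied deepfake market growth: {deepfake_cagr:.1%} per year")
```

Taken at face value, the quoted forecasts imply an AI market of roughly $100 billion in 2021, and a deepfake market that roughly doubles every year.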

Possible Solutions(?)

Here are some ideas for how to deal with the problems caused by the fast growth of AI and the spread of fake news:

- Media literacy: Giving people the knowledge and tools they need to recognize and judge the truth of the information they see and hear. This could be done through school programs that teach people how to use technology critically, public awareness campaigns, and easy-to-use tools for checking information.

- Regulation: Governments can set rules about how AI-generated content can be used, especially in political campaigns, to make sure it’s not used to spread false information. This could mean putting clear labels on content made by AI or limiting how much AI is used in political ads.

- Technical solutions: Creating new tools and technologies to find and fight misinformation, such as AI algorithms that can spot deepfakes or blockchain-based systems that can check if information is real.

- Technology companies: Platforms need to make sure they aren’t being used to spread false information. This could mean investing in tools that find and stop it, and working with outside groups and experts on the best ways to limit its spread.

- International cooperation: Neither the technology nor the false information it spreads stops at borders, so countries will need to work together. That could include sharing best practices, agreeing on common standards, and developing solutions jointly.
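To make the "technical solutions" idea above a little more concrete, here is a minimal sketch of how a ledger of content fingerprints could work. It is an illustration only, not a real blockchain or an existing product: the `ProvenanceLedger` class, its methods, and the publisher name are all hypothetical. It also has a clear limit: it can show that a file matches something a publisher registered, not that the content is true.

```python
import hashlib
import json


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 fingerprint of some bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


class ProvenanceLedger:
    """Hypothetical append-only log of content fingerprints (illustration only)."""

    def __init__(self):
        self.entries = []

    def register(self, publisher, content):
        # Each entry records the previous entry's hash, forming a chain.
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else "0" * 64
        record = {
            "publisher": publisher,
            "content_hash": sha256_hex(content),
            "prev_hash": prev_hash,
        }
        record["entry_hash"] = sha256_hex(json.dumps(record, sort_keys=True).encode())
        self.entries.append(record)
        return record

    def is_registered(self, content):
        # True only if these exact bytes were registered earlier.
        digest = sha256_hex(content)
        return any(entry["content_hash"] == digest for entry in self.entries)

    def chain_is_intact(self):
        # Recompute every entry hash; any edit to a past entry breaks the chain.
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            expected = sha256_hex(json.dumps(body, sort_keys=True).encode())
            if entry["prev_hash"] != prev_hash or entry["entry_hash"] != expected:
                return False
            prev_hash = entry["entry_hash"]
        return True


ledger = ProvenanceLedger()
original = b"Official statement, 1 March"
ledger.register("example-newsroom", original)

print(ledger.is_registered(original))                                 # exact copy
print(ledger.is_registered(b"Official statement, 1 March (edited)"))  # altered copy
print(ledger.chain_is_intact())
```

Changing even one character of the content produces a different fingerprint, so an altered copy no longer matches the registered original. A real system would also need digital signatures and a trusted, shared place to keep the ledger, which this sketch leaves out.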

To put these solutions into action, governments, businesses, and society as a whole will need to work together and use a variety of methods. But we need to act now, before AI-driven misinformation makes these problems even harder to solve.
